Designing with Extended Intelligence

In this course we studied the background and fundamentals of machine learning and AI, and created our own ML model to accomplish a task.

Artificial Intelligence Background

The last two weeks aligned thematically to offer both an inspiring and a critical look at artificial intelligence, machine learning, and decentralised computing from a number of perspectives. We started with a provocation from Kenric McDowell, who shared his work and personal experience co-authoring a book with an AI language model, GPT-3. Kenric presented the writing of Pharmako-AI as a conversational process between human and machine, but one that also depended heavily on his curation of GPT-3’s outputs. This was a great way to open my exploration of AI because it immediately raised the question of what intelligence really implies, and showed that the relationship and dependence between machine and human, running in both directions, is a much more nuanced topic than it first appears. I was interested in Mariana’s take on the project, which framed the use of GPT-3 as a way to harness the power of all collective human knowledge up to this point. On the other hand, a machine can only harness what it is or isn’t fed in the first place, perhaps like the human mind as well, which made me reflect on the relationship between something or someone being all-knowing and all-repeating. If a machine or person is tasked with making judgements without access to all the information, then those judgements will be biased towards the scope of the input data. This became increasingly clear as we delved deeper into how machine learning works.

In the continued conversation with Jose Luis on the Atlas of Weak Signals, I saw the impact and implications of commercialised AI and how companies are using it to achieve more with less, namely, fewer humans. It was refreshing and powerful to then move into a conversation about society and wealth distribution, and to consider that using AI to replace work doesn’t have to result in joblessness but in job-needlessness. As with all the topics in the atlas, nothing is isolated from other systems, but it’s our duty to reinforce those connections so that no topic gets defined on its own. Once more, this becomes a conversation about the ruling powers and the significance of the voice of the masses. If companies are able to automate all work, will they be willing to distribute more wealth? If they don’t, their customers will have no capital to buy with, so what is their purpose? And if they don’t want to distribute more wealth, should they automate in the first place? There are no definitive answers here, but it’s interesting to explore these connections, break free from the ‘proof-of-concept’ framing of new technology, and jump to its implications at scale.

Using Machine Learning

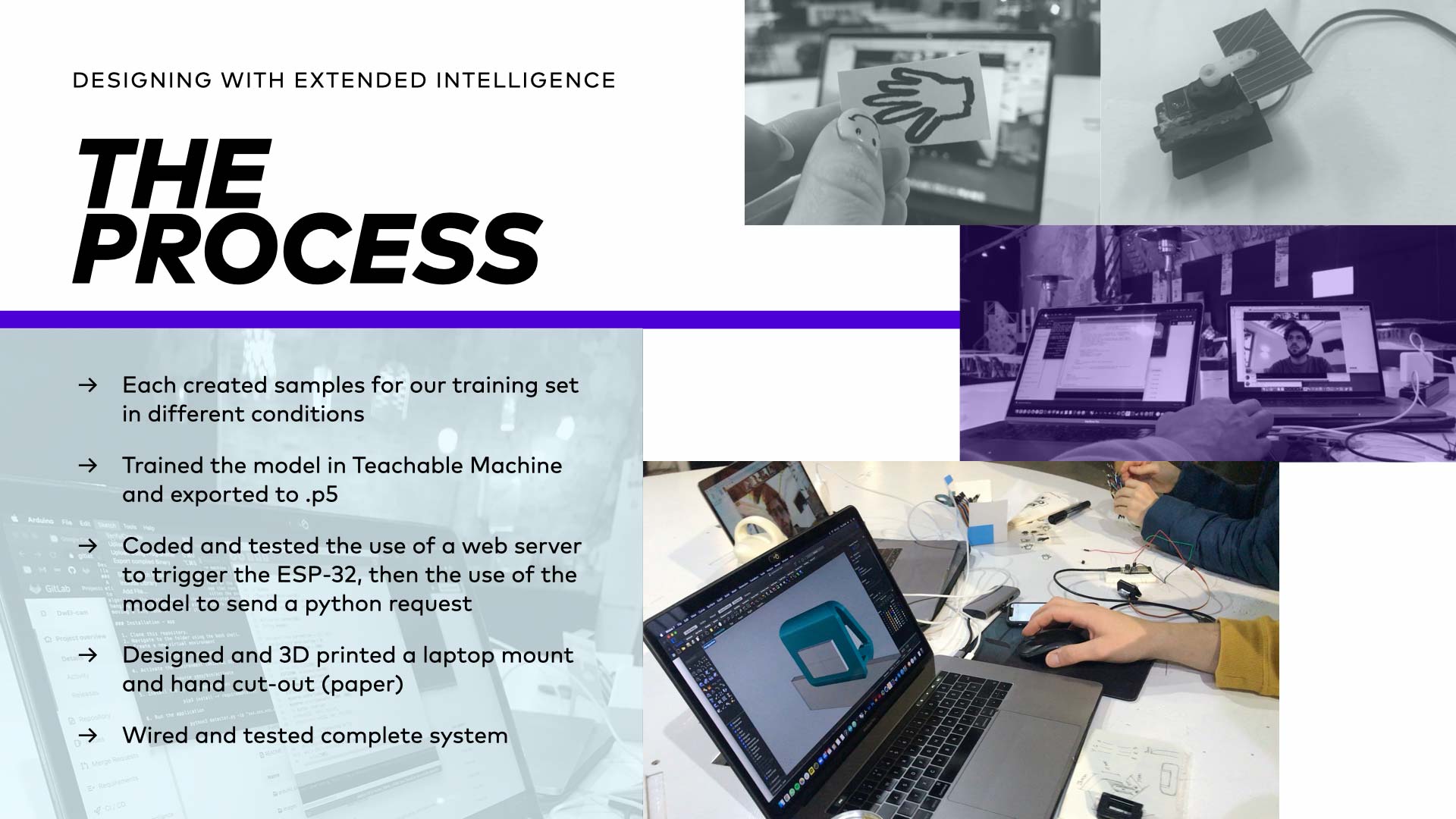

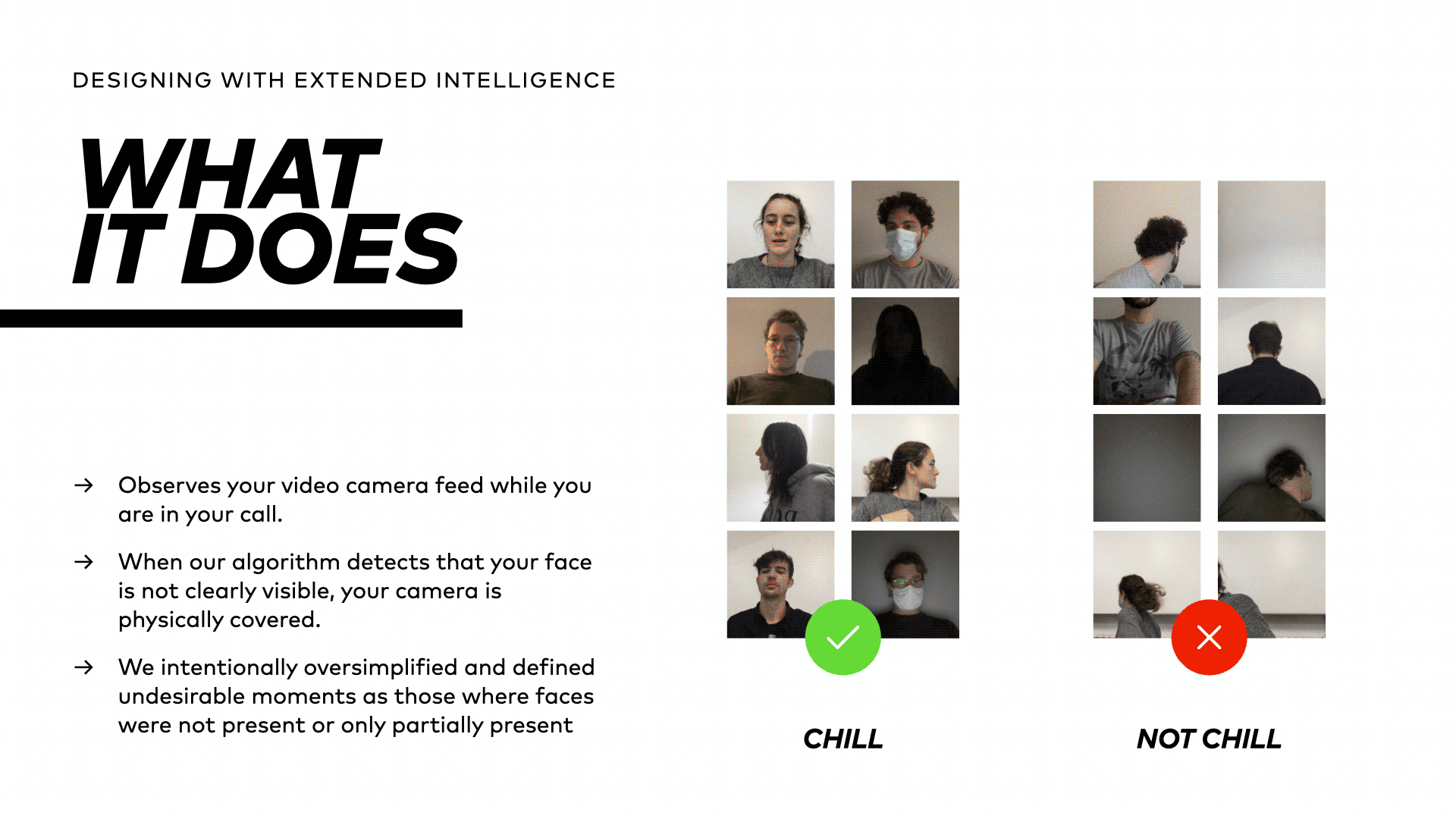

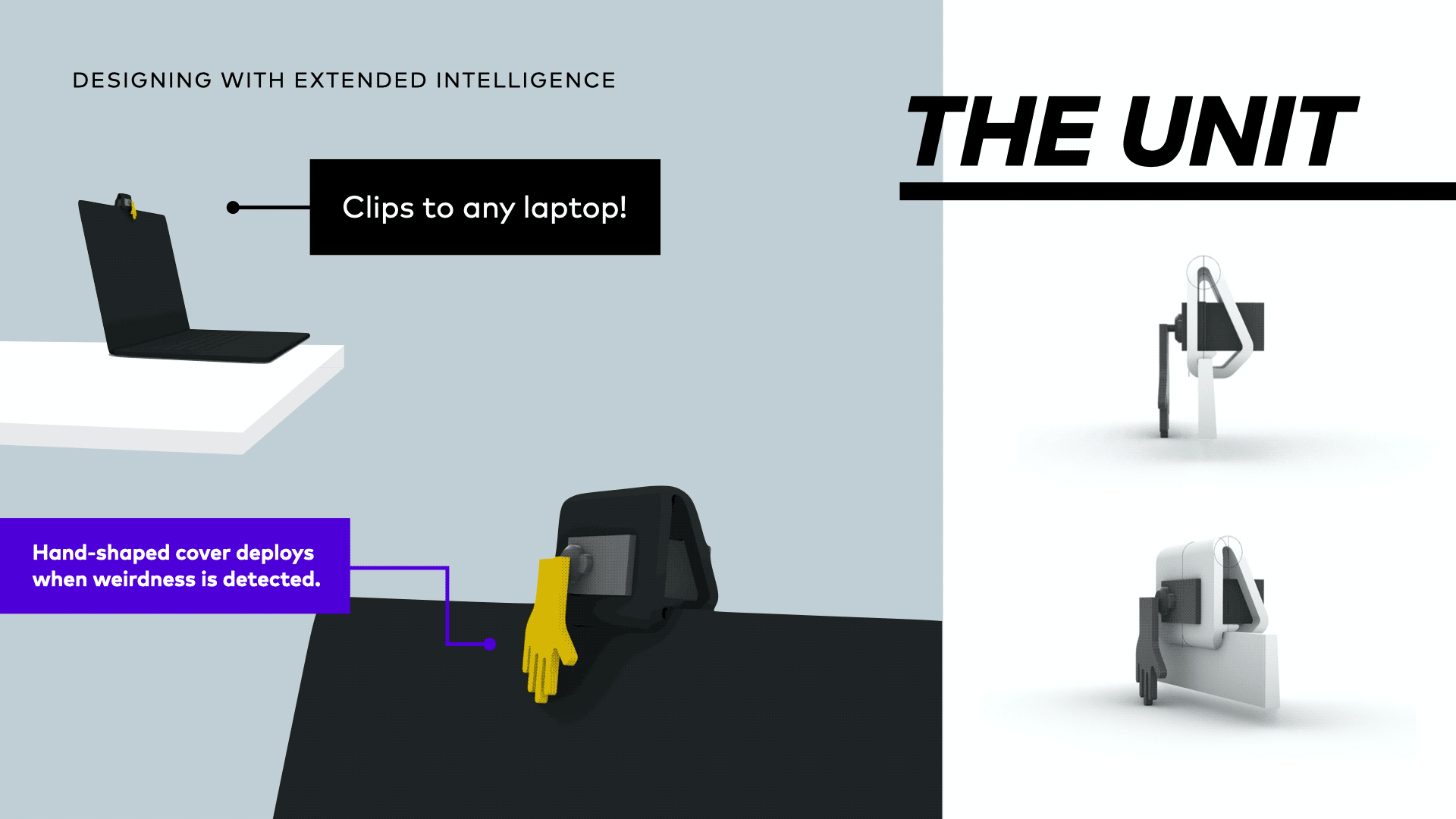

In our Designing with Extended Intelligence class I had the chance to create my first AI model and work with my team to trigger a somewhat practical physical device using computer vision. The course was clearly difficult for the entire class, perhaps as a culmination of issues that had been building up over the previous few weeks: confusion about in-person versus remote sessions, limited hands-on support, and unclear expectations and direction on how to apply the subject matter. In the end, I know I got a lot out of it, but it does raise the question of whether we are being over-exposed to the details of certain disciplines without fully understanding the prerequisites and foundations that led to their development. The teaching style for these more abstract topics might also be worth revisiting. Once more, it was very satisfying to work with Pietro, Morgie, Márk, and Anaïs to create a functioning device, this time with machine learning. Using Google’s Teachable Machine, we trained a model to distinguish between a person being ‘present’ or ‘not present’ in their video feed, actuating a servo-powered, hand-shaped lens cover to block the camera whenever the person was classified as ‘not present.’
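
The device boils down to a loop: the model scores each video frame, and that score decides where the servo moves. The sketch below shows one way that decision step could look. It is not the code our team wrote. It assumes the model returns a probability for the ‘present’ class on every frame, and it averages the last few frames so that a single misclassified frame does not make the hand flicker; that smoothing is an added assumption. The names `LensCoverController`, `PRESENT_THRESHOLD`, `WINDOW_SIZE`, `OPEN_ANGLE` and `CLOSED_ANGLE` are hypothetical, and sending the angle to the actual servo (for example via a microcontroller) is left out.

```python
from collections import deque

# Hypothetical tuning values, for illustration only.
PRESENT_THRESHOLD = 0.5  # minimum average 'present' probability to keep the camera open
WINDOW_SIZE = 5          # number of recent frames to average over
OPEN_ANGLE = 0           # servo angle with the hand raised (camera visible)
CLOSED_ANGLE = 90        # servo angle with the hand covering the lens


class LensCoverController:
    """Maps per-frame 'present' probabilities to a servo angle (hypothetical sketch)."""

    def __init__(self, window_size: int = WINDOW_SIZE, threshold: float = PRESENT_THRESHOLD):
        # deque with maxlen automatically drops the oldest frame once full
        self.history = deque(maxlen=window_size)
        self.threshold = threshold

    def update(self, p_present: float) -> int:
        """Record one frame's probability and return the angle the servo should take."""
        self.history.append(p_present)
        average = sum(self.history) / len(self.history)
        # Cover the lens only when the recent frames, on average, say 'not present'
        return OPEN_ANGLE if average >= self.threshold else CLOSED_ANGLE


if __name__ == "__main__":
    controller = LensCoverController()
    for p in [0.9, 0.2, 0.2, 0.1]:
        print(p, controller.update(p))
```

Averaging trades a little responsiveness for stability: the cover drops a few frames after someone leaves rather than on the first uncertain frame, which matters for a physical part that would otherwise twitch.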

The resulting product is a somewhat useful device that worked surprisingly well, but it raised obvious questions about what kind of bias our limited training data might have embedded in it if it were marketed as a working solution to a problem. Unlike the physical computing and basic programming work we did previously, we did not fully dissect the concepts of machine learning ourselves. Existing model generators and libraries accelerated our path to practical uses of ML, but also left us dependent on a kind of black box whose workings we don’t fully understand (nor the motivation of a company like Google to make these resources available). It was clear that my classmates and I were most interested in the ethical questions around ML and AI, and we hope to dig into them more soon.